I recently read The Great Leveler by Walter Scheidel, an academic historian and social scientist. This is a book about the rise of inequality in human society, and about the ways in which it has been reduced historically – which is, unfortunately, always through mass violence. The book appeared on my radar because it comes up in generational theory discussions online, and in fact is referenced in Neil Howe’s book, The Fourth Turning Is Here (I should know, as I worked on the bibliography and end notes). I was curious to learn how Scheidel’s study might relate to the historical cycles in generational theory. A big open question is: now that we are in a Fourth Turning, or Crisis Era, is some kind of leveling event on the horizon?

First, a review of the book.

Scheidel identifies four different kinds of violent ruptures which reduce inequality, and calls them the “Four Horsemen of Leveling.” They are: mass mobilization warfare, transformative revolution, state failure, and lethal pandemics. In his book, each horseman gets its own section with a few chapters. There’s also a section introducing the concept of inequality, and some final sections of analysis, plus a technical appendix.

This book is a heavy read, written with academic precision. Scheidel wastes no words, such that each of his paragraphs is replete with meaning. Sometimes I had to reread them to be sure I had caught every nuance. Nonetheless, his writing style is engaging enough that it carried me through more than 400 pages of detailed historical analysis. I was never bored, in other words.

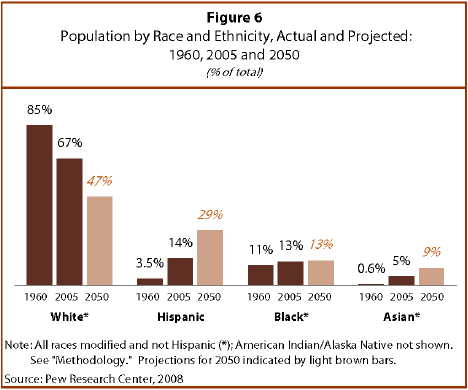

The scope of Scheidel’s analysis is all of human history, and he even speculates on inequality in prehistory (he argues that it can be measured using burial sites, with evident nutritional health as a proxy for wealth and status). His overall conclusion about wealth and income inequality is that it is always present to some degree, and always grows in any stable and economically complex society. Basically, once you get civilization, with its ability to generate surplus wealth, an elite class will inevitably emerge, claim an unequal proportion of that wealth, and tenaciously hold onto it.

As he goes through the “horsemen of leveling” in each of their sections, Scheidel looks at specific occurrences across the world and the centuries, going into detail about just what they accomplished as they trampled through history. He uses a variety of measures of inequality, including the well-known Gini coefficient and the proportions of wealth owned by the upper economic classes. A generous supply of charts and graphs complements the text.

Scheidel acknowledges that for much of the historical past, there is limited data with which to work. It’s easier to look at the modern period, with its ample records generated by the fiscal administrative state. So, for the distant past, much of his analysis is speculative. This is a common enough problem when historians attempt to apply a thesis across the entire breadth of human history.

One thing that is striking about Scheidel’s review of history vis-à-vis inequality is how rare leveling events of any significance are. This is the reason, I suppose, for the persevering aptness of the saying “the rich get richer and the poor get poorer.” In one graph of the long-term trend for Europe – covering the past two millennia – there are only three events that produce significant, persistent leveling: the collapse of the Roman Empire, the “Black Death” bubonic plague pandemic of the late Middle Ages, and the so-called “Great Compression” that occurred in the World War era and birthed the modern-day middle class (now eroding away as inequality reasserts itself).

Those events cover three of the four horsemen. The fourth, transformative revolution, manifested in the Communist Revolutions in Russia and China in the first half of the twentieth century. But these also are distinct and rare examples where an event (revolution) produced persistent leveling. Notably, the American and French revolutions of the late eighteenth century did not. Scheidel argues that this is because effecting significant transformative change required the vast industrial economies of modern times, which earlier revolts and revolutions lacked.

Not only are mass violence events that persistently reduce inequality rare, but inequality also eventually returns as societies stabilize after recovering from them. This has been the story of the latter decades of the postwar era in which we currently live, during which the leveling caused by the World Wars has pretty much reversed, and inequality is returning to what it was in the Gilded Age.

In the last chapters of the book, Scheidel examines the potential leveling effect from factors other than mass violence, such as progressive tax structures or social welfare, and concludes that they have only modest impact. He also speculates on the possibility of the horsemen returning, suggesting that this is unlikely. Modern civilization is complex and robust, with little chance of systemic collapse or revolution from below. Warfare has become high-tech, precluding the need for mass mobilization. And with modern medicine, even plague has lost its power, as we saw with the Covid pandemic (which happened after the book’s publication).

It would seem that the only potential mass violence event that could erase inequality in our near future would be an all-out global thermonuclear war. As with historical instances of far-reaching violent ruptures, this would achieve leveling simply by destroying vast amounts of property and killing vast numbers of people. One must wonder, then, if inequality isn’t tolerable, given the drastically negative alternative. This is a somewhat depressing conclusion, which even the author himself acknowledges.

If there is any glimmer of hope in this book, it lies buried in the statistics. Redistributive policies are shown to have a greater effect on inequality of disposable income than on inequality of market income. In other words, they ease the burden of the cost of living, even if they can’t stop elites in the upper brackets from hoarding wealth in nominal terms. Better to have inequality but without immiseration, if nothing else.

In the appendix, there is some technical discussion about a measurement called the “extraction rate.” This is Gini divided by its maximum possible value, and thus a measure of how close a society is to achieving maximum possible inequality. What is found is that the rate gets close to 100% in simpler, pre-modern societies, but that it is attenuated in the modern age, with its more complex economies and its higher expectations of what constitutes an acceptable quality of life.
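To make the extraction rate a little more concrete, here is a minimal sketch in Python. It is not Scheidel's own calculation. The `gini` function uses the standard rank-based formula. The `max_feasible_gini` function is my assumption about how the "maximum possible value" could be defined: it supposes that everyone except a vanishingly small elite lives at bare subsistence, so the ceiling depends only on how far average income sits above subsistence. The function names and the sample numbers are hypothetical.

```python
from typing import Sequence


def gini(incomes: Sequence[float]) -> float:
    """Gini coefficient of non-negative incomes (0 means perfectly equal)."""
    values = sorted(incomes)
    n = len(values)
    total = sum(values)
    if n == 0 or total <= 0:
        raise ValueError("need at least one positive income")
    # Standard formula on sorted data: weight each income by its rank.
    weighted = sum(rank * value for rank, value in enumerate(values, start=1))
    return (2 * weighted) / (n * total) - (n + 1) / n


def max_feasible_gini(mean_income: float, subsistence: float) -> float:
    """ASSUMPTION (not from the book's text): the highest Gini a society can
    sustain if all but a tiny elite receive exactly the subsistence income."""
    if subsistence <= 0 or mean_income < subsistence:
        raise ValueError("mean income must be at least a positive subsistence level")
    return 1 - subsistence / mean_income


def extraction_rate(actual_gini: float, max_gini: float) -> float:
    """Actual Gini as a fraction of its maximum possible value."""
    if max_gini <= 0:
        raise ValueError("maximum Gini must be positive")
    return actual_gini / max_gini


# Hypothetical pre-modern society: average income only 1.25x subsistence.
poor_rate = extraction_rate(0.19, max_feasible_gini(1.25, 1.0))

# Hypothetical modern society: average income 50x subsistence.
rich_rate = extraction_rate(0.40, max_feasible_gini(50.0, 1.0))

print(f"pre-modern extraction rate: {poor_rate:.0%}")
print(f"modern extraction rate: {rich_rate:.0%}")
```

With these made-up inputs, the poorer society has the lower Gini (0.19 versus 0.40) yet an extraction rate of roughly 95%, while the richer one comes in at roughly 41%. That is the pattern the appendix describes: a simple economy has little surplus to take, so even modest measured inequality can mean the elite is taking nearly all of it.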

The attenuation of the extraction rate is the one way that economic development and growth could be said to be a “rising tide that lifts all boats,” even though the wealthy benefit far more from a stable, growing society than the rest of us do. Yes, we ordinary folks are peasants compared to the likes of Elon Musk, but we still enjoy a standard of living that is much better than that of most of humanity that came before us. For that, I suppose, we should be grateful, and not be wishing for the return of the horsemen and some sort of disruptive leveling event.

Unless, of course, you’re eager to scrabble for survival in a post-apocalyptic radioactive wasteland.

Next, some more thoughts on Scheidel’s study, including how it relates to the question I posed above about the Fourth Turning.

In his introduction, Scheidel emphasizes that his thesis is that mass violence events reduce inequality, not that inequality necessarily leads to mass violence. And while he doesn’t mention it in the introduction, it emerges later in the text that mass violence isn’t guaranteed to lead to leveling – it’s just that when leveling occurs, it is always because of a preceding mass violence event. These are important logical distinctions!

Turnings theory predicts that there will be some kind of disruption at the end of the saecular cycle, based on generational drivers. While this doesn’t have to involve mass violence, the likelihood of that occurring does increase in the Fourth and final Turning of the cycle. That’s because, in the Fourth Turning, society acts with a sense of urgency in the face of the problems that beset it, and is open to drastic action.

It could be the case that wealth inequality is one of these problems, but it could be something else instead. So Turnings theory is in accord with Scheidel: inequality per se is not necessarily what will lead to drastic social action, which might include mass violence. Though one could argue that even if wealth inequality isn’t a proximate cause of social upheaval, it could be an ultimate cause, through its relation to other social factors – for example, through its corrosive effect on social trust, making it easier for leaders to foment division. In other words, inequality could be understood as symptomatic of a general breakdown of the social order.

When we look at historical Fourth Turnings, the event that seems most like a social crisis precipitated by inequality is the French Revolution. But here, Scheidel is clear in his analysis. However historically momentous the event might have been, it didn’t have much effect on wealth inequality. I have written about the French Revolution before, in another book review. What I learned from the book I read is that the impetus for the Revolution was not merely that the poor peasantry of France was oppressed; there was a drive for change up and down the social scale, coming out of the political philosophies of the Enlightenment. It was a transformative revolution, no doubt, but it wasn’t a leveling event.

The point is, the cataclysmic events of a Fourth Turning will certainly transform the civic order, but there is no guarantee that this will result in a more equal society afterwards. Take the American Civil War – arguably the most destructive war the U.S. has fought, certainly so if measured strictly by total casualties. Afterwards came the Gilded Age, renowned for its wealth inequality. While the Civil War was in some ways a modern war of mass mobilization, featuring conscription and industrial-scale combat, in its outcome it was more like a traditional war where one elite (Northern industrialists) becomes enriched at the expense of another (Southern planters). This is Scheidel’s conclusion, anyway.

Scheidel might dismiss events like the American Revolution or American Civil War for not meeting the criteria to be considered “great levelers,” but in my opinion this simply exposes a limitation of his approach. These were clearly hugely significant events historically, because they transformed the political order, indeed the very identity of the nation. But this can’t be captured by measuring income and wealth shares and ratios. Those graphs might look pretty steady within the timeframe of these events, but that’s because they simply measure a material fact, whereas human history and the human experience are more than a material phenomenon. They involve ideas and passions, which are never going to be visible in a coefficient based on monetary values.

Now, in the World War era, when mass mobilization warfare did achieve leveling, it was in part because of the accompanying physical destruction and the ruination of elites, but also because mobilizing the masses required elevating them materially. It wasn’t strictly the violence of war that produced leveling; it was to a great degree the policies that came about because of the needs of war. For example, the Japanese government enforced high rates of taxation to support its war effort, effectively redistributing wealth from the very rich. Non-belligerents in both world wars (such as Switzerland and Sweden) were affected by the need to mobilize and experienced leveling, even though they didn’t fight. Democratization, unionization, and the social welfare state all came out of mass mobilization for the world wars.

This observation reminds me of the famous essay by William James, The Moral Equivalent of War, written just before World War I. James gets that war, while brutal and atrocious, also galvanizes a society toward achieving a common purpose. He speculates on whether it would be possible to harness that dynamic to some purpose other than militaristic destruction; he suggests infrastructure-building projects (he calls it an “army enlisted against Nature”). Interestingly, his idea anticipated the organized labor corps of the later New Deal era in the United States.

Could something like that be done today, so we don’t have to start World War III just to get to another Golden Age? What William James misses in his essay is that in order to muster the social will to fight a war, or its equivalent, there has to be a sense of emergency – a sense that the nation faces high stakes. This was provided in the 1930s by the Great Depression and the rise of the Axis powers. What could provide it today – and what could provide a sense of emergency that’s not a military conflict? Climate change, maybe? There is not a good record of a society-wide willingness to face the realities of climate change, but here Nature might force our hand.

To conclude, and reiterate points already made, Turnings theory and Scheidel’s study of economic leveling teach some of the same lessons. While it is true that crisis conflicts involving mass violence can result in a more economically equal society, there is no guarantee that they will. Nor is there any reason to predict that the social tensions created by inequality will necessarily lead to violence, and given the former lesson, it’s hardly something to wish for.

One last point. In Scheidel’s first chapters, where he discusses inequality in general, it’s notable that he argues that the tendency for a stable society to gravitate towards states of material inequality is not tied to any particular economic system. In other words, it’s not specifically a fault with free-market capitalism, our current system. It’s a fault with human nature, and all civilized societies face the issue.

That’s not to say we shouldn’t critique capitalism, just that we can’t exclusively blame it for inequality and expect that jettisoning it as a system (were that even possible) would lead to a more equal society. The lessons of the Communist revolutions are plain. I do think that baking wealth redistribution into a market-capitalist system makes sense, as argued earlier, because it improves quality of life for the masses, even as the Gini coefficient keeps climbing asymptotically toward its maximum possible value. In my mind, that’s a good reason to continue supporting progressive causes, rather than simply hoping that the cycles of history will take care of our problems for us.

An abridged version of this post appears as my review of the book on Goodreads.